In this post we will derive a decentralised stability criterion for a class of structured dynamical models. This will form the basis of our modular approach to control system design for power systems. We will achieve this by taking a complex analysis twist on the eigenvalue problem, while producing some pretty pictures along the way!

This is the third post in the series. If you haven’t already, please see Part I for an overview and Part II for an introduction to power system modelling.

Overview

Stability is one of the most basic requirements of secure system operation, and captures the idea that the system will return to equilibrium (the operating point) if it is perturbed. The goal of this post is to develop modular stability conditions for systems with dynamics described by the state-space model

\[\dot{x}(t)=\mathbf{A}x(t)+\mathbf{B}u(t),\;x(0)\in\mathbb{R}^m.\]

We will refer to this model as the system model.

The modular stability criterion that we will develop applies when the \(\mathbf{A}\) and \(\mathbf{B}\) matrices in the system model have the special structure:

\[\mathbf{A}=\begin{bmatrix}\mathbf{A}_1\\&\ddots{}\\&&\mathbf{A}_n\end{bmatrix}-\underbrace{\begin{bmatrix}\mathbf{B}_1\\&\ddots{}\\&&\mathbf{B}_n\end{bmatrix}}_{\mathbf{B}}\mathbf{N}\begin{bmatrix}\mathbf{C}_1\\&\ddots{}\\&&\mathbf{C}_n\end{bmatrix},\]

where \(\mathbf{A}_i\in\mathbb{R}^{m_i\times{}m_i}\), \(\mathbf{B}_i\in\mathbb{R}^{m_i\times{}1}\), \(\mathbf{C}_i\in\mathbb{R}^{1\times{}m_i}\) and \(\mathbf{N}\in\mathbb{R}^{n\times{}n}\).

To make the mathematical claims in this post rigorous we need to assume that each pair \((\mathbf{A}_i,\mathbf{B}_i)\) is controllable and each pair \((\mathbf{A}_i,\mathbf{C}_i)\) is observable. I don’t want to go into this in too much detail here, but this is a very mild assumption that can be easily checked. In this post we need this assumption for two reasons:

- It guarantees that the eigenvalues of \(\mathbf{A}_i\) coincide with the poles of \[p_i(s)=\mathbf{C}_i(s\mathbf{I}-\mathbf{A}_i)^{-1}\mathbf{B}_i.\]This will allow us to divide through certain expressions using \(\det{(s\mathbf{I}-\mathbf{A}_i)}\) and form the transfer functions \(p_i(s)\) with impunity, since we can be sure that no pole-zero cancellations will occur.

- It guarantees that for all \(s\in\mathbb{C}\), \[\textrm{rank}\,\left(\begin{bmatrix}s\mathbf{I}-\mathbf{A}_i\\\mathbf{C}_i\end{bmatrix}\right)=m_i.\]This is known as the PBH (Popov–Belevitch–Hautus) observability condition. For our purposes the key implication of this expression is that if \(v\neq{}0\) and \(\mathbf{C}_iv=0\), then \((s\mathbf{I}-\mathbf{A}_i)v\neq{}0\).

I will point out where these technical points are required in the proofs. As a small aside, for the specific claims in the post the weaker requirements of stabilisability and detectability are enough. However I prefer the controllability and observability assumption since it ties the realisation of the state-space model for each component to its transfer function more concretely. This means that we could just as easily make claims about eigenvalue locations of the \(\mathbf{A}\) matrix on any subset of the complex plane, not just the right half-plane. This allows for analogous results for discrete time to be obtained immediately, and requirements involving system damping to be considered with no changes to the proofs (just replace right half-plane with whatever region you like in all the stability guarantees and modular tests below).

As we saw last time, this type of structure arises naturally when the system model has been modularly constructed from \(n\) components and a network. The matrices \(\mathbf{A}_i\) — \(\mathbf{C}_i\) come from the component models, and \(\mathbf{N}\) from the network model.

Stability has a sharp characterisation in terms of the matrix \(\mathbf{A}\). In particular the system model is stable if the eigenvalues of \(\mathbf{A}\) do not lie in the right half-plane.

The remainder of this post is devoted to developing a pass-fail test that can be applied to the matrices \(\mathbf{A}_i\) — \(\mathbf{C}_i\). This test has the following key property. If for each \(i\in\{1,\ldots{},n\}\):

the test on the matrices \(\mathbf{A}_i\) — \(\mathbf{C}_i\) is passed

— the modular test

then:

The matrix \(\mathbf{A}\) in the system model has no eigenvalues in the right half-plane for a class of network models.

— the stability guarantee

Forcing components to pass the modular test gives a simple, provable, and scalable way to control large interconnected systems, with the following key benefits:

- Components can be freely added and removed without compromising stability.

- It is inherently robust since stability is guaranteed for a class of network models.

- It is simple to design for, since local controllers can be designed to meet the modular test based entirely on local modelling information.

At a conceptual level, this overcomes all the control challenges posed in Part I. We will see how to apply the tests developed today to the power system models from the last post next time!

The simplest test — only one component

In order to introduce the relevant concepts, let us first deal with the simplest case — when there is only one component. In this case the network model \(\mathbf{N}\) is simply a constant number, and \(\mathbf{A}=\mathbf{A}_1-\mathbf{B}_1\mathbf{N}\mathbf{C}_1\). We will show how to develop a test that only depends on the component model that has the following stability guarantee:

The matrix \(\mathbf{A}\) in the system model has no eigenvalues in the right half-plane for all network models with \[0<\mathbf{N}\leq{}1.\]— stability guarantee (1)

To proceed, let us introduce the complex variable \(s\) (this is just a variable that can take on complex number values — unlike in mathematics, in the control literature it is not common to use the variable \(z\) for this purpose unless the system model is in discrete time). The modular test that we will develop will be written in terms of the complex function

\[p_1(s)=\mathbf{C}_1\left(s\mathbf{I}-\mathbf{A}_1\right)^{-1}\mathbf{B}_1.\]

This will always be the ratio of two polynomials with real coefficients. For example, if

\[\mathbf{A}_1=\begin{bmatrix}-2&0\\1&0\end{bmatrix},\,\mathbf{B}_1=\begin{bmatrix}1\\0\end{bmatrix},\,\mathbf{C}_1=\begin{bmatrix}1&1\end{bmatrix},\]

then

\[p_1(s)=\frac{s+1}{s\,(s+2)}.\]
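If you want to convince yourself of this, here is a minimal sketch that evaluates \(\mathbf{C}_1(s\mathbf{I}-\mathbf{A}_1)^{-1}\mathbf{B}_1\) directly for the matrices above and compares it with the closed form. The helper names `transfer_2x2` and `p1_closed_form` are my own, and the code only handles the \(2\times{}2\) case by hand rather than using a linear algebra library.

```python
# Evaluate p_1(s) = C_1 (sI - A_1)^{-1} B_1 for the 2x2 example and
# compare it with the closed form (s + 1) / (s (s + 2)).

A1 = [[-2.0, 0.0], [1.0, 0.0]]
B1 = [1.0, 0.0]  # column vector
C1 = [1.0, 1.0]  # row vector


def transfer_2x2(A, B, C, s):
    """Return C (sI - A)^{-1} B for a 2x2 matrix A via the adjugate formula."""
    m11, m12 = s - A[0][0], -A[0][1]
    m21, m22 = -A[1][0], s - A[1][1]
    det = m11 * m22 - m12 * m21
    # adj(M) B, where M = sI - A and adj(M) = [[m22, -m12], [-m21, m11]]
    x1 = m22 * B[0] - m12 * B[1]
    x2 = -m21 * B[0] + m11 * B[1]
    return (C[0] * x1 + C[1] * x2) / det


def p1_closed_form(s):
    return (s + 1) / (s * (s + 2))


# A few arbitrary test points away from the poles at s = 0 and s = -2.
for s in (1 + 0j, 0.5 + 2j, 3 - 1j):
    print(s, transfer_2x2(A1, B1, C1, s), p1_closed_form(s))
```

The two printed values on each line should agree up to rounding error.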

This complex function is typically referred to as a transfer function. We will now show that the following modular test provides the stability guarantee.

For all \(s\) in the right half-plane,

\[p_1(s)\notin{}(-\infty,-1].\]— modular test (1)

Although this test might look intimidating and unintuitive, it really isn’t. If you’ve done a basic control course, you know at least two ways to check and understand this test — using root locus or Nyquist diagrams. However I’m going to assume that you know neither. In the next section I will give you the visual intuition for this condition using some beautiful tools from complex analysis.

However first let us establish that this modular test really does provide the stability guarantee — feel free to skip ahead if you’re not interested in the details. Recall that \(\det{\left(s\mathbf{I}-\mathbf{A}\right)}=0\) if and only if \(s\) is equal to an eigenvalue of \(\mathbf{A}\). Substituting in for \(\mathbf{A}\) shows that this condition is equivalent to:

\[\det{\left(\left(s\mathbf{I}-\mathbf{A}_1\right)+\mathbf{B}_1\mathbf{N}\mathbf{C}_1\right)}=0.\]

By the matrix determinant lemma,

\[\det{\left(\left(s\mathbf{I}-\mathbf{A}_1\right)+\mathbf{B}_1\mathbf{N}\mathbf{C}_1\right)}=\det\left(s\mathbf{I}-\mathbf{A}_1\right)+\mathbf{N}\mathbf{C}_1\text{adj}\,{\left(s\mathbf{I}-\mathbf{A}_1\right)}\mathbf{B}_1.\]

This shows that \(s\) is an eigenvalue of \(\mathbf{A}\) if and only if [this actually needs technical assumption 1]

\[\frac{\mathbf{C}_1\text{adj}\,{\left(s\mathbf{I}-\mathbf{A}_1\right)}\mathbf{B}_1}{\det\left(s\mathbf{I}-\mathbf{A}_1\right)}=-\frac{1}{\mathbf{N}}.\]

Note that the term on the left-hand side of the above is just a fancy way of writing \(p_1(s)\), since

\[(s\mathbf{I}-\mathbf{A}_1)^{-1}=\frac{\text{adj}\,\left(s\mathbf{I}-\mathbf{A}_1\right)}{\det\left(s\mathbf{I}-\mathbf{A}_1\right)}.\]

Therefore we have established that \(s\) is an eigenvalue of \(\mathbf{A}\) if and only if \(p_1(s)=-1/\mathbf{N}\). Now observe that

\[0<\mathbf{N}\leq{}1\,\Longleftrightarrow{}\,-1/\mathbf{N}\in(-\infty{},-1].\]

Therefore satisfying the modular test guarantees that \(p_1(s)\neq{}-1/\mathbf{N}\) for every \(s\) in the right half-plane and every \(0<\mathbf{N}\leq{}1\). This means that the eigenvalues of \(\mathbf{A}\) cannot lie in the right half-plane, which is precisely the stability guarantee.
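As a quick sanity check of the argument, the sketch below uses the \(2\times{}2\) example from earlier, for which \(\mathbf{A}=\mathbf{A}_1-\mathbf{B}_1\mathbf{N}\mathbf{C}_1\) has characteristic polynomial \(s^2+(2+\mathbf{N})s+\mathbf{N}\). It computes the eigenvalues for a few values of \(\mathbf{N}\) and confirms that each one satisfies \(p_1(s)=-1/\mathbf{N}\). The function names and the particular values of \(\mathbf{N}\) are my own choices for illustration.

```python
import cmath


def p1(s):
    return (s + 1) / (s * (s + 2))


def closed_loop_eigenvalues(N):
    """Eigenvalues of A = A_1 - B_1 N C_1 = [[-2 - N, -N], [1, 0]]."""
    # Roots of the characteristic polynomial s^2 + (2 + N) s + N.
    b, c = 2.0 + N, N
    root = cmath.sqrt(b * b - 4.0 * c)
    return ((-b + root) / 2.0, (-b - root) / 2.0)


for N in (0.1, 0.5, 1.0):
    for lam in closed_loop_eigenvalues(N):
        # Each eigenvalue should satisfy p_1(lam) = -1/N.
        print(N, lam, p1(lam), -1.0 / N)
```

For this example every eigenvalue has negative real part, as the stability guarantee predicts.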

Checking the modular test using domain colouring

Arguably the most common method for visualising complex functions is domain colouring. In this method, each input \(s\) of the function

\[w=p_1(s)\]

is assigned a colour depending on the output of the function (the value of \(w\)).

Tal Brenev has developed a nice online tool for visualising domain colourings that can be found here, though beware, the colour map he used is not the same as the one used in this post. Alternatively software packages in many languages are available for producing plots. I have used Elias Wegert’s tools to produce these pictures, so many thanks to him! If you’re interested in learning more about domain colourings I also recommend checking out Hans Lundmark’s page on complex analysis, which includes links to many other resources.

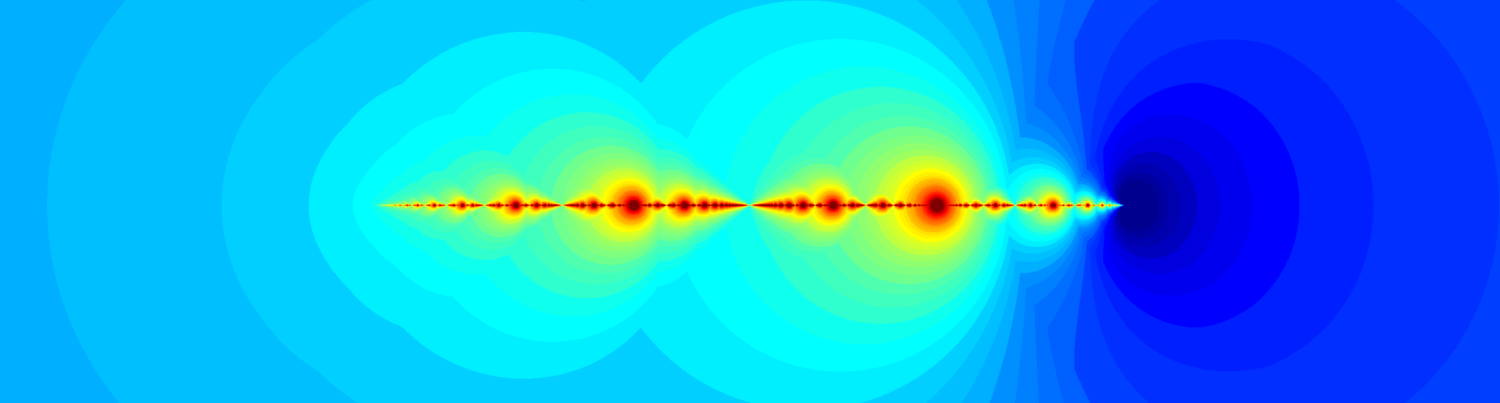

The reason for using domain colouring is that it allows us to quickly see where values in the \(s\)-plane (the domain of the function) are mapped by the function. For example, in the above we see that no values of \(s\) in the right half-plane are mapped onto the negative real numbers. This is because the light blue area (which is associated with negative real numbers in the output space) does not appear at all in the right half-plane of the domain colouring.

So how does this help? Well remember that we want to check whether \(p_1(s)\) lies in the interval \((-\infty,-1]\) for values of \(s\) in the right half-plane. Our observation about where the blue region was mapped shows that for every value of \(s\) in the right half-plane, \(p_1(s)\notin(-\infty,0]\). Therefore we have developed a graphical sufficient condition for passing our modular test!
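To make the idea concrete, here is a rough sketch of a phase-only domain colouring. I am assuming the common convention in which the hue is proportional to the argument of \(w\), so that positive reals come out red and negative reals come out cyan. This is consistent with the light blue described above, but it is not necessarily the exact colour map used for the pictures in this post. The function `phase_colour` and the sampling grid are hypothetical choices of mine.

```python
import cmath
import colorsys
import math


def phase_colour(w):
    """Map a complex value w to an RGB triple using its argument as the hue."""
    hue = (cmath.phase(w) % (2.0 * math.pi)) / (2.0 * math.pi)
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


def p1(s):
    return (s + 1) / (s * (s + 2))


print(phase_colour(-3 + 0j))  # negative real output: cyan, (0.0, 1.0, 1.0)
print(phase_colour(2 + 0j))   # positive real output: red, (1.0, 0.0, 0.0)

# Sample points with Re(s) > 0 and record the largest |arg p_1(s)| seen.
grid = [complex(0.05 + 0.25 * i, -10.0 + 0.25 * k)
        for i in range(40) for k in range(81)]
worst = max(abs(cmath.phase(p1(s))) for s in grid)
print(math.degrees(worst))
```

On this grid the argument of \(p_1(s)\) never gets close to \(\pm{}180\) degrees, which is the numerical counterpart of the cyan region never appearing in the right half-plane. A finite grid is of course only evidence, not a proof.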

So how can we refine this approach? Well, although the colouring of the \(w\)-plane that we used is extremely aesthetically pleasing, it isn’t the most effective for checking our modular test. A more direct approach would be to use the more mundane colouring of the output space:

Here we have only coloured the interval \((-\infty,-1]\). While more dull to look at, this gives us a very direct way to check the modular test:

The function \(p_1(s)\) satisfies modular test (1) if and only if the coloured region in this domain colouring lies in the left half-plane.

In the control literature, this type of plot is referred to as a root locus diagram (note it is not the whole root locus, since we haven’t included the interval \((-1,0)\) in the \(w\)-plane). There exist simple graphical rules as well as software implementations for drawing these plots, though a discussion of this will have to wait for another time!

An extension to any number of components

We will now develop a modular test that works no matter how many components we have. It provides the following stability guarantee:

The matrix \(\mathbf{A}\) in the system model has no eigenvalues in the right half-plane for all network models that satisfy:

\(1.\) \(\mathbf{N}=\mathbf{N}^T\), meaning that \(\mathbf{N}\) is a symmetric matrix.

\(2.\) The smallest eigenvalue of \(\mathbf{N}\) is greater than zero, and the largest is less than or equal to one.

— stability guarantee (2)

The class of network models might look a bit abstract for now, but is really just a matrix version of the single component class. It actually gives a rather compact description of the transmission network models that we saw in the previous post. We will go into this in more detail next time.

Just as in the one component case, the test for each component can be written in terms of the transfer function

\[p_i(s)=\mathbf{C}_i\left(s\mathbf{I}-\mathbf{A}_i\right)^{-1}\mathbf{B}_i.\]

The following modular test provides the stability guarantee:

For all \(s\) in the right half-plane

\[\mathrm{Re}\left(p_i(s)\right)>-1.\]— modular test (2)

This test is very similar to the one component test. In the subsequent sections we will first show how to check it using the domain colouring technique. We will then derive the result, and give an extension of the modular test that gives us a lot more flexibility.

Checking the second modular test

The test is actually very similar to the one component case. However this time the critical region in the output space is

\[\text{Re}(w)\leq{}-1.\]

This corresponds to the half-plane to the left of the \(-1\) point in the \(w\)-plane. This means that just like before we can use a domain colouring to check this condition.

This gives us an analogous graphical condition for checking the modular test:

The function \(p_i(s)\) satisfies modular test (2) if and only if the coloured region in this domain colouring lies in the left half-plane.

And that’s all there is to it! This simple modular test on the component transfer function can be used as a basis for modular control system design.

It is worth pointing out how similar this is to the one component case. Look at the thick white line in the domain colouring above. This is the mapping of the interval \((-\infty,-1]\), which corresponds precisely to the colouring for modular test (1). The red area enlarges the colouring, but not too much, with the thick white line forming a kind of spine. This gives us a hint about how to design local controllers so that modular test (2) is passed. If we can work out how to manipulate the spine to lie in the left half-plane, with a bit of luck the red region will follow. We will come back to this idea in a later post. However, if you do have a background in control you already know what to do, because designing for a ‘good spine’ is equivalent to designing for a large gain margin (Hint for later: Bode’s ideal transfer function!).

Before moving on, we note that there are several other ways to check modular test (2), using:

- Nyquist diagrams.

- The Kalman–Yakubovich–Popov lemma.

- Circuit theory.

These are all discussed in the paper. It is also possible to check modular test (2) by plotting the domain colouring of \(1+p_i(s)\) using the standard colour map. This allows existing tools to be used to check modular test (2) without writing new software to handle custom colour maps.

Why does modular test (2) work?

In this section we will prove that modular test (2) provides stability guarantee (2). Again, feel free to skip ahead if you’re not interested in the gory details. We will use a slightly different approach than before and use proof by contradiction. First let us establish the key implication (for our proof) of modular test (2).

Satisfying the modular test implies that for every \(s\) in the right half-plane, \(\textrm{Re}\,(p_i(s))>-1\). This implies that given any non-zero vector \(z\in\mathbb{C}^n\),

\[\textrm{Re}\left(\frac{\sum_{i=1}^nz_i^*z_ip_i(s)}{z^*z}\right)>-\frac{\sum_{i=1}^nz_i^*z_i}{z^*z}=-1.\]

Now consider

\[\frac{z^*\mathbf{N}^{-1}z}{z^*z}.\]

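This is called the Rayleigh quotient of the matrix \(\mathbf{N}^{-1}\). For symmetric matrices the Rayleigh quotient is a real number that lies between the smallest and largest eigenvalue of the matrix. For every network model in the class in stability guarantee (2) the eigenvalues of \(\mathbf{N}\) lie in \((0,1]\), so the eigenvalues of \(\mathbf{N}^{-1}\) are all greater than or equal to one. This means that for every such network model: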

\[\textrm{Re}\left(\frac{-z^*\mathbf{N}^{-1}z}{z^*z}\right)\leq{}-1.\]
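The Rayleigh quotient bound is easy to test numerically. The sketch below picks one symmetric \(2\times{}2\) matrix \(\mathbf{N}\) of my own choosing, with eigenvalues of roughly 0.34 and 0.76, and evaluates the Rayleigh quotient of \(\mathbf{N}^{-1}\) at random complex vectors. The helper functions are hypothetical and only handle the \(2\times{}2\) case.

```python
import random

N = [[0.6, 0.2], [0.2, 0.5]]  # symmetric; eigenvalues roughly 0.34 and 0.76


def inverse_2x2(M):
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [[M[1][1] / det, -M[0][1] / det],
            [-M[1][0] / det, M[0][0] / det]]


def rayleigh_quotient(M, z):
    """Return z* M z / z* z for a 2x2 matrix M and a complex 2-vector z."""
    Mz = [M[0][0] * z[0] + M[0][1] * z[1],
          M[1][0] * z[0] + M[1][1] * z[1]]
    num = z[0].conjugate() * Mz[0] + z[1].conjugate() * Mz[1]
    den = abs(z[0]) ** 2 + abs(z[1]) ** 2
    return num / den


random.seed(0)
N_inv = inverse_2x2(N)
samples = [[complex(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(2)]
           for _ in range(1000)]
quotients = [rayleigh_quotient(N_inv, z) for z in samples]

# Smallest real part seen, and largest imaginary part (should be ~0).
print(min(q.real for q in quotients), max(abs(q.imag) for q in quotients))
```

Every sampled quotient should be real (up to rounding) and at least one, so its negative is at most \(-1\), matching the inequality above.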

Putting these two observations together shows that for every network in the class in stability guarantee (2), modular test (2) implies that for every \(s\) in the right half-plane and every non-zero \(z\in\mathbb{C}^n\),

\[\frac{\sum_{i=1}^nz_i^*z_ip_i(s)}{z^*z}\neq{}\frac{-z^*\mathbf{N}^{-1}z}{z^*z}.\]

We will now use this to establish a contradiction. Let us assume that the claim of the stability guarantee is false, and that for some network model in the class, the matrix \(\mathbf{A}\) has an eigenvalue in the right half-plane. That is, for some \(s\) in the right half-plane, there exists a \(v\in\mathbb{C}^m\) that satisfies the eigenvalue equation

\[\mathbf{A}v=sv.\]

Remember that

\[\mathbf{A}=\underbrace{\begin{bmatrix}\mathbf{A}_1\\&\ddots{}\\&&\mathbf{A}_n\end{bmatrix}}_{\mathbf{\bar{A}}}-\underbrace{\begin{bmatrix}\mathbf{B}_1\\&\ddots{}\\&&\mathbf{B}_n\end{bmatrix}}_{\mathbf{B}}\mathbf{N}\underbrace{\begin{bmatrix}\mathbf{C}_1\\&\ddots{}\\&&\mathbf{C}_n\end{bmatrix}}_{\mathbf{C}}.\]

Substituting in for \(\mathbf{A}\) and rearranging slightly gives

\[\mathbf{B}\mathbf{N}\mathbf{C}v=-\left(s\mathbf{I}-\mathbf{\bar{A}}\right)v.\]

By [technical assumption 2], this implies that \(\mathbf{C}v\neq{}0\). Multiplying both sides by \(\mathbf{C}\textrm{adj}\,\left(s\mathbf{I}-\mathbf{\bar{A}}\right)\) shows that this implies that

\[\mathbf{C}\text{adj}\,\left(s\mathbf{I}-\mathbf{\bar{A}}\right)\mathbf{B}\mathbf{N}\mathbf{C}v=-\det{\left(s\mathbf{I}-\mathbf{\bar{A}}\right)}\mathbf{C}v.\]

Now let \(z=\mathbf{N}\mathbf{C}v\). Since the matrix \(\mathbf{N}\) is invertible for every network in the network class, this implies that \(z\neq{}0\) and

\[\mathbf{C}\text{adj}\,\left(s\mathbf{I}-\mathbf{\bar{A}}\right)\mathbf{B}z=-\det{\left(s\mathbf{I}-\mathbf{\bar{A}}\right)}\mathbf{N}^{-1}z.\]

This implies that [this step needs technical assumption 1]

\[\frac{\mathbf{C}\text{adj}\,\left(s\mathbf{I}-\mathbf{\bar{A}}\right)\mathbf{B}}{\det{\left(s\mathbf{I}-\mathbf{\bar{A}}\right)}}z=-\mathbf{N}^{-1}z.\]

Now because the matrices \(\mathbf{\bar{A}},\mathbf{B}\) and \(\mathbf{C}\) are block diagonal, the above simplifies to

\[\begin{bmatrix}p_1(s)z_1\\\vdots{}\\p_n(s)z_n\end{bmatrix}=-\mathbf{N}^{-1}z.\]

Multiplying on the left by \(z^*\) and dividing through by \(z^*z\) shows that this implies that

\[\frac{\sum_{i=1}^nz_i^*z_ip_i(s)}{z^*z}=\frac{-z^*\mathbf{N}^{-1}z}{z^*z}.\]

And this is our contradiction! Therefore given any \(s\) in the right half-plane there exists no vector \(v\) satisfying the eigenvalue equation for \(\mathbf{A}\). Hence \(\mathbf{A}\) has no eigenvalues in the right half-plane, which is precisely the claim in stability guarantee (2).
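To see the guarantee in action, here is a small toy experiment of my own (it is not one of the power system models from the previous post). I take two scalar components with \(\mathbf{A}_i=-a_i\), \(\mathbf{B}_i=1\) and \(\mathbf{C}_i=c_i\), so that \(p_i(s)=c_i/(s+a_i)\). For \(a_i>0\) the real part of \(p_i(s)\) over the right half-plane is bounded below by \(\min(0,c_i/a_i)\), which makes modular test (2) easy to check by hand. All names and numbers below are assumptions chosen for illustration.

```python
import cmath


def build_A(a, c, N):
    """A = diag(-a_1, -a_2) - B N C with B = I and C = diag(c_1, c_2)."""
    return [[-a[0] - N[0][0] * c[0], -N[0][1] * c[1]],
            [-N[1][0] * c[0], -a[1] - N[1][1] * c[1]]]


def eigenvalues_2x2(M):
    trace = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return ((trace + root) / 2.0, (trace - root) / 2.0)


def worst_real_part(a_i, c_i):
    """Infimum of Re p_i(s) over Re(s) > 0 for p_i(s) = c_i / (s + a_i), a_i > 0."""
    return min(0.0, c_i / a_i)


N = [[0.6, 0.2], [0.2, 0.5]]  # symmetric; eigenvalues roughly 0.34 and 0.76
a = (1.0, 2.0)
c_pass = (-0.5, -1.5)  # both components satisfy Re p_i(s) > -1
c_fail = (-3.0, -1.5)  # the first component violates the test

for c in (c_pass, c_fail):
    passes = all(worst_real_part(ai, ci) > -1.0 for ai, ci in zip(a, c))
    print(passes, eigenvalues_2x2(build_A(a, c, N)))
```

In the first case both components pass and \(\mathbf{A}\) has no right half-plane eigenvalues. In the second case the test fails and, for this particular network, \(\mathbf{A}\) does have an eigenvalue in the right half-plane. Keep in mind that the test is only sufficient, so failing it does not imply instability in general.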

More modular tests

Modular test (2) is the first of an entire family of modular tests that provide stability guarantee (2). In this section we will describe this family. This class gives us considerable extra flexibility to tailor the modular test to match the dynamics of the components and the control systems we design. To really understand how to exploit this flexibility requires an understanding of how to design controllers to meet the modular tests. We will do this in future posts. The presentation below just focuses on illustrating these tests, and what they mean mathematically.

Conceptually every test in this family works just like modular test (2). To introduce them, we need the notion of a positive real function.

A function \(h(s)\) is positive real if it is real when \(s\) is real, and it has positive real part when \(s\) has positive real part.

While this definition may seem like a bit of a mouthful, for any rational function with real coefficients all it means is that for every \(s\) in the right half-plane, \(h(s)\) is also in the right half-plane. This means that we can also characterise this class of functions using domain colourings.

A function \(h(s)\) is positive real if the coloured region in this domain colouring lies in the left half-plane.

The positive real functions can be used to come up with the following generalisation of modular test (2):

For all \(s\) in the right half-plane

\[\mathrm{Re}\left(h(s)(p_i(s)+1)\right)>0,\]

where \(h(s)\) is positive real.

— generalised modular test (2)

If every component satisfies generalised modular test (2) then we also obtain stability guarantee (2). In fact modular test (2) simply corresponds to the case \(h(s)=1\).

Just as with modular test (2), any of these generalisations can be checked by plotting a domain colouring.

The function \(p_i(s)\) satisfies generalised modular test (2) if and only if the coloured region in this domain colouring of \(h(s)(1+p_i(s))\) lies in the left half-plane.

The extra flexibility in generalised modular test (2) can be exploited to tailor the tests to particular types of functions. We will return to this in future posts, but to illustrate this, suppose that

\[p_i(s)=\frac{5}{(s+1)^3}.\]

This function fails modular test (2). However for a judicious choice of \(h(s)\) the same function passes generalised modular test (2). For example, take

\[h(s)=\frac{s+1}{s+5}.\]

This time the test is passed. In fact (though we will not prove this here), given any function that passes modular test (1), there is guaranteed to exist an \(h(s)\) such that it also passes generalised modular test (2).
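The example with \(p_i(s)=5/(s+1)^3\) and \(h(s)=(s+1)/(s+5)\) can also be checked numerically without drawing any pictures. The sketch below samples a grid of points with positive real part (the grid is an arbitrary choice of mine) and looks at the smallest real part of \(1+p_i(s)\) and of \(h(s)(1+p_i(s))\). A grid search like this can only provide evidence, not a proof.

```python
def p(s):
    return 5.0 / (s + 1.0) ** 3


def h(s):
    return (s + 1.0) / (s + 5.0)


# Sample points with 0 < Re(s) <= 10.01 and |Im(s)| <= 10.
grid = [complex(0.01 + 0.25 * i, -10.0 + 0.25 * k)
        for i in range(41) for k in range(81)]

# Modular test (2) needs Re(1 + p(s)) > 0 everywhere in the right half-plane.
min_plain = min((1.0 + p(s)).real for s in grid)

# Generalised modular test (2) needs Re(h(s) (1 + p(s))) > 0 instead.
min_weighted = min((h(s) * (1.0 + p(s))).real for s in grid)

print(min_plain, min_weighted)
```

The first minimum is negative, so modular test (2) fails, while the second stays positive on the whole grid, which is consistent with generalised modular test (2) being passed.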

Observe that this test is very similar to checking whether \(h(s)(1+p_i(s))\) is positive real — the only difference is that the domain colouring must lie strictly in the left half-plane. This actually firmly ties these modular tests to some very powerful tools in control theory, including \(\mathscr{H}_\infty\) methods. This is discussed in the paper.

So why do these more general tests work? The reason can be seen from the proof of modular test (2). In short, analogous arguments show that if generalised modular test (2) holds, then for every \(s\) in the right half-plane and every non-zero \(z\in\mathbb{C}^n\),

\[\textrm{Re}\left(\frac{\sum_{i=1}^nz_i^*z_ih(s)p_i(s)}{z^*z}\right)>-\frac{\sum_{i=1}^nz_i^*z_i\textrm{Re}\left(h(s)\right)}{z^*z}=-\textrm{Re}\left(h(s)\right).\]

Similarly for every \(\mathbf{N}\) in the network class

\[\textrm{Re}\left(\frac{-z^*h(s)\mathbf{N}^{-1}z}{z^*z}\right)\leq{}-\textrm{Re}\left(h(s)\right).\]

Together these also establish that

\[\frac{\sum_{i=1}^nz_i^*z_ip_i(s)}{z^*z}\neq{}\frac{-z^*\mathbf{N}^{-1}z}{z^*z}.\]

Stability guarantee (2) then follows using exactly the same contradiction argument.

More stability guarantees

Generalised modular test (2) also provides more stability guarantees than just stability guarantee (2). In particular it also provides:

The matrix \(\mathbf{A}\) in the system model has no eigenvalues in the right half-plane, except possibly for some simple eigenvalues on the imaginary axis, for all network models that satisfy:

\(1.\) \(\mathbf{N}=\mathbf{N}^T\), meaning that \(\mathbf{N}\) is a symmetric matrix.

\(2.\) The eigenvalues of \(\mathbf{N}\) are all greater than zero and less than or equal to one, except for a single eigenvalue that equals zero.

— modified stability guarantee (2)

The main differences between this and stability guarantee (2) are:

- It allows for network models with a zero eigenvalue.

- It cannot guarantee that the \(\mathbf{A}\) matrix does not have simple eigenvalues on the imaginary axis.

Point 1 is important for the power system application because the transmission network model will always have a zero eigenvalue. Therefore we need a broader network class than in stability guarantee (2) to get useful modular guarantees for that application. Point 2 slightly modifies the interpretation of ‘stability’ of the system model. This type of stability is typically referred to as marginal stability. The difference is rather subtle and not worth worrying about too much, especially since (as we will show below) \(\mathbf{A}\) has imaginary axis eigenvalues only if \(\bar{\mathbf{A}}\) does. This means that if we design suitable local stabilising controllers to remove these, \(\mathbf{A}\) will also have no imaginary axis eigenvalues.

Why do we also get modified stability guarantee (2)?

We will now explain why generalised modular test (2) provides modified stability guarantee (2). Beware though, things are about to get quite involved — only proceed if you are very interested.

First let us understand the difficulty caused by the zero eigenvalue. The issue is that if the matrix \(\mathbf{N}\) has a zero eigenvalue, it is not invertible. In the proof of modular test (2) we used invertibility both to set up our key insight and to establish our contradiction. First let us modify these statements so that we don’t require any inverses. This can be done by introducing an extra variable

\[z=\mathbf{N}y.\]

Doing so allows us to rewrite our key insight as

\[\frac{\sum_{i=1}^nz_i^*z_ip_i(s)}{z^*z}\neq{}\frac{-y^*\mathbf{N}y}{y^*\mathbf{N}^2y}.\]
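In case the right-hand side looks like it came out of nowhere, here is the one-line calculation behind it. Pretend for a moment that \(\mathbf{N}\) is invertible, substitute \(z=\mathbf{N}y\) into the Rayleigh quotient from before, and use \(\mathbf{N}=\mathbf{N}^T\) (with \(\mathbf{N}\) real):

\[\frac{-z^*\mathbf{N}^{-1}z}{z^*z}=\frac{-(\mathbf{N}y)^*\mathbf{N}^{-1}(\mathbf{N}y)}{(\mathbf{N}y)^*(\mathbf{N}y)}=\frac{-y^*\mathbf{N}\mathbf{N}^{-1}\mathbf{N}y}{y^*\mathbf{N}\mathbf{N}y}=\frac{-y^*\mathbf{N}y}{y^*\mathbf{N}^2y}.\]

The final expression contains no inverse, which is what lets us reuse it when \(\mathbf{N}\) is singular.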

However unlike before, this statement is only valid if \(\mathbf{N}y\neq{}0\). However if we add this to our assumptions, we can still obtain a contradiction using the same method as last time. In particular, if we additionally assume that \(\mathbf{N}\mathbf{C}v\neq{}0\), exactly the same argument as before shows that

\[\frac{\sum_{i=1}^nz_i^*z_ip_i(s)}{z^*z}=\frac{-y^*\mathbf{N}y}{y^*\mathbf{N}^2y}.\]

This contradicts our modified statement, and means that satisfying generalised modular test (2) implies that no \(s\) in the right half-plane can be an eigenvalue of \(\mathbf{A}\) with an eigenvector \(v\) that satisfies \(\mathbf{N}\mathbf{C}v\neq{}0\).

Therefore we only need to understand what happens when \(\mathbf{N}\mathbf{C}v=0\). This is most easily done by looking directly at the eigenvalue problem. As shown before, this can be written as

\[\mathbf{B}\mathbf{N}\mathbf{C}v=-(s\mathbf{I}-\bar{\mathbf{A}})v.\]

From this we see that if \(\mathbf{N}\mathbf{C}v=0\), then for the above to hold \(s\) must be an eigenvalue of \(\bar{\mathbf{A}}\). Therefore we can strengthen our claim to be: \(\mathbf{A}\) has no eigenvalues in the right half-plane, except possibly at points where \(s\) is equal to an eigenvalue of \(\bar{\mathbf{A}}\).

We are now very close to demonstrating modified stability guarantee (2). The missing piece is to show that if \(\bar{\mathbf{A}}\) has an eigenvalue in the open right half-plane or a non-simple eigenvalue on the imaginary axis, then at least one function will fail generalised modular test (2).

The first thing to notice is that [technical assumption 1] implies that each eigenvalue of \(\bar{\mathbf{A}}\) is equal to a pole of one of the complex functions \(p_i(s)\). To proceed requires a little more complex analysis. The key insight is to imagine where a small loop drawn around any right half-plane poles of \(p_i(s)\) in the \(s\)-plane will be mapped by the function \(h(s)(1+p_i(s))\).

Since the right half-plane poles of \(p_i(s)\) and \(h(s)(1+p_i(s))\) are the same, a consequence of Cauchy’s argument principle is that this small loop will be mapped into a curve in the \(w\)-plane that encircles the origin \(x\) times, where \(x\) is the degree of the pole. Since this curve is encircling the origin, part of it must lie in the left half-plane of the \(w\)-plane. This means that some point in the right half-plane of the \(s\)-plane will be mapped to a value with negative real part, and hence \(p_i(s)\) cannot satisfy generalised modular test (2).

A slight modification of this argument can also be used to rule out non-simple imaginary axis poles. Therefore the only eigenvalues of \(\bar{\mathbf{A}}\) that remain are simple imaginary axis eigenvalues, and we have obtained modified stability guarantee (2)! We can take this even further, and characterise exactly when \(\mathbf{A}\) will have imaginary axis eigenvalues. It turns out that in the power system case (this needs extra properties of Laplacian matrices) this can happen if and only if every \(\mathbf{A}_i\) has an imaginary axis eigenvalue in exactly the same location. This post is already too long, so we won’t prove this here. My hint for those who want to fill in the details themselves is to use the Perron–Frobenius theorem!
